Webmaster level: Intermediate

Has your site ever dropped suddenly from the index or disappeared mysteriously from search results? Have you ever received a notice that your site is using cloaking techniques? Unfortunately, sometimes a malicious party "hacks" a website: they penetrate the security of a site and insert undesirable content. Sophisticated attackers can camouflage this spammy or dangerous content so that it doesn't appear for normal users, and appears only to Googlebot, which could negatively impact your site in Google's results.

In such cases it used to be very difficult to detect the problem, because the site would appear normal to its users. The hidden content might be served only to requests that carry a Googlebot User-agent and come from one of Googlebot's IP addresses. But that's over: with Fetch as Googlebot, the new Labs feature in Webmaster Tools, you can see exactly what Googlebot is seeing and spot this kind of cloaking problem. We'll show you how:

Let's imagine that Bob, the administrator of www.example.com, is searching for his site but he finds this instead:

That's strange, because when he looks at the source code of www.example.com, it looks fine:

To his surprise, Bob may also receive a notice from Google warning him that his site is not complying with Google's quality guidelines. Fortunately, his site is registered with Webmaster Tools. Let's see how he can check what Googlebot sees:

First, Bob logs in to Webmaster Tools and selects www.example.com. The Fetch as Googlebot feature is at the bottom of the navigation menu, in the Labs section:

The page contains a field where Bob can enter the URL to fetch. He can also leave it blank to fetch the homepage.

Bob can simply click Fetch and wait a few seconds. After refreshing the page, he can see the status of the fetch request. If it succeeds, he can click on the "Success" link...

...and that will show the details, with the content of the fetched page:

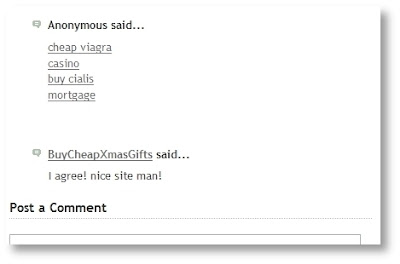

Aha! There's the spammy content! Now Bob can be certain that www.example.com has been hacked.
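If the injected content is harder to spot by eye, one option is to save both copies of the page (the source as seen in a browser, and the content shown by Fetch as Googlebot) and compare them. The following is only a minimal sketch using Python's standard `difflib` module; the function name `injected_lines` and the sample HTML are hypothetical and made up for illustration, not part of Webmaster Tools.

```python
import difflib


def injected_lines(browser_html: str, googlebot_html: str) -> list[str]:
    """Return lines that appear in the Googlebot copy but not in the browser copy."""
    diff = difflib.ndiff(browser_html.splitlines(), googlebot_html.splitlines())
    # ndiff marks lines that exist only in the second input with a "+ " prefix.
    return [line[2:].strip() for line in diff if line.startswith("+ ")]


# Hypothetical sample data standing in for the two saved copies of the page.
browser_copy = """<html>
<body>
<h1>Welcome to example.com</h1>
</body>
</html>"""

googlebot_copy = """<html>
<body>
<h1>Welcome to example.com</h1>
<a href="http://spam.example/pills">cheap pills</a>
</body>
</html>"""

for line in injected_lines(browser_copy, googlebot_copy):
    print("only served to Googlebot:", line)
```

Keep in mind that pages with dynamic parts (timestamps, session tokens, rotating ads) will also differ between two fetches, so a difference is a hint to look closer rather than proof of hacking.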

Confirming that the website has been hacked (and perhaps is still hacked) is an important step. It is, however, only the beginning. To clean up the site, we strongly suggest getting help from your server administrator or hosting provider and reading our previous blog posts on the subject of hacked sites:

- My site's been hacked - now what?

- A quick security checklist for webmasters

- Message center warnings for hackable sites

- Best practices against hacking

If you have any questions about how to use the Fetch as Googlebot feature, feel free to drop by the Webmaster Help Forum. If you feel that your website might be hacked but are having problems resolving it, you might want to ask the experts in our "Malware and Hacked sites" category.

PS Keep in mind that once you have removed hacked content from your site, it will generally still take time for us to update our search results accordingly. A number of factors affect the crawling and indexing of your content, so it's impossible to give a time frame for that.