(Cross-posted from the Google Online Security Blog.)

"This site may harm your computer"

You may have seen those words in Google search results, but what do they mean? If you click the search result link, you get another warning page instead of the website you were expecting. But if the web page was your grandmother's baking blog, you're still confused. Surely your grandmother hasn't been secretly honing her l33t computer hacking skills at night school. Google must have made a mistake and your grandmother's web page is just fine...

I work with the team that helps put the warning in Google's search results, so let me try to explain. The good news is that your grandmother is still kind and loves turtles. She isn't trying to start a botnet or steal credit card numbers. The bad news is that her website, or the server it runs on, probably has a security vulnerability, most likely from some out-of-date software. That vulnerability has been exploited and malicious code has been added to your grandmother's website. It's most likely an invisible script or iframe that pulls in content from another website, and that content tries to attack any computer that views the page. If the attack succeeds, then viruses, spyware, keyloggers, botnet software, and other nasty stuff will get installed.
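To make "invisible script or iframe" a little more concrete, here is a rough Python sketch a webmaster could run over a saved copy of one of their pages. It is an illustrative heuristic only, not how Google's scanners work: it flags `<iframe>` tags sized at 0 or 1 pixel or hidden with CSS, and `<script>` tags whose `src` points at a host other than the site's own. The names `SuspiciousTagFinder` and `find_suspicious_tags`, and the example hosts, are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class SuspiciousTagFinder(HTMLParser):
    """Rough heuristic: flag <iframe> tags that look invisible and
    <script> tags that load code from a host other than own_host."""

    def __init__(self, own_host):
        super().__init__()
        self.own_host = own_host.lower()
        self.findings = []  # list of (kind, src) tuples

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value may be None.
        a = {name: (value or "") for name, value in attrs}
        if tag == "iframe" and self._looks_hidden(a):
            self.findings.append(("hidden iframe", a.get("src", "")))
        elif tag == "script" and a.get("src"):
            host = urlparse(a["src"]).netloc.lower()
            # Relative URLs have an empty host and belong to the site itself.
            if host and host != self.own_host:
                self.findings.append(("external script", a["src"]))

    @staticmethod
    def _looks_hidden(a):
        # Tiny sizes and CSS hiding are common ways to keep an injected
        # iframe out of sight of visitors.
        style = a.get("style", "").replace(" ", "").lower()
        return (
            a.get("width") in ("0", "1")
            or a.get("height") in ("0", "1")
            or "display:none" in style
            or "visibility:hidden" in style
        )


def find_suspicious_tags(html, own_host):
    """Return a list of (kind, src) findings for one page of HTML."""
    finder = SuspiciousTagFinder(own_host)
    finder.feed(html)
    finder.close()
    return finder.findings


if __name__ == "__main__":
    page = (
        "<p>Cookies!</p>"
        '<iframe src="http://bad.example/x" width="0" height="0"></iframe>'
        '<script src="http://bad.example/a.js"></script>'
        '<script src="/js/site.js"></script>'
    )
    for kind, src in find_suspicious_tags(page, "grandma.example"):
        print(kind, src)
```

Expect both false positives (legitimate analytics or ad scripts are also loaded from other hosts) and false negatives (injected code is often obfuscated or assembled by JavaScript at runtime), so an empty result does not mean a page is clean.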

If you see the warning on a site in Google's search results, it's a good idea to pay attention to it. Google has automatic scanners that are constantly looking for these sorts of web pages. I help build the scanners and continue to be surprised by how accurate they are. There is almost certainly something wrong with the website even if it is run by someone you trust. The automatic scanners make unbiased decisions based on the malicious content of the pages, not the reputation of the webmaster.

Servers are just like your home computer and need constant updating. There are lots of tools that make building a website easy, but each one adds some risk of being exploited. Even if you're diligent and keep all your website components updated, your web host may not be. They control your website's server and may not have installed the most recent OS patches. And it's not just innocent grandmothers that this happens to. There have been warnings on the websites of banks, sports teams, corporations, and government agencies.

Uh-oh... I need help!

Now that we understand what the malware label means in search results, what do you do if you're a webmaster and Google's scanners have found malware on your site?

There are some resources to help clean things up. The Google Webmaster Central blog has some tips and a quick security checklist for webmasters.

Stopbadware.org has great information, and their

forums have a number of helpful and knowledgeable volunteers who may be able to help (sometimes I'm one of them). You can also use the Google SafeBrowsing diagnostics page for your site (http://www.google.com/safebrowsing/diagnostic?site=

<site-name-here>) to see specific information about what Google's automatic scanners have found. If your site has been flagged, Google's

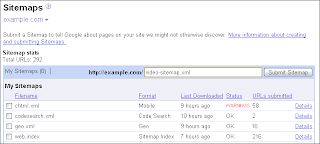

Webmaster Tools lists some of the URLs that were scanned and found to be infected.
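If you look after several sites, the diagnostics page URL quoted above can be built programmatically. This sketch assumes that the URL pattern given in this post still applies and that the `site` parameter expects a bare host name; `diagnostic_url` is a hypothetical helper, not a Google API.

```python
from urllib.parse import urlencode, urlparse

# URL pattern quoted in this post (assumed still valid).
DIAGNOSTIC_BASE = "http://www.google.com/safebrowsing/diagnostic"


def diagnostic_url(site):
    """Build the diagnostics page URL for a host name or a full URL."""
    site = site.strip()
    # Assumption: the page wants only the host, so drop scheme and path.
    if "://" in site:
        host = urlparse(site).netloc
    else:
        host = site.split("/")[0]
    return DIAGNOSTIC_BASE + "?" + urlencode({"site": host.lower()})


if __name__ == "__main__":
    print(diagnostic_url("http://grandma.example/blog/"))
```

For example, `diagnostic_url("http://grandma.example/blog/")` returns `http://www.google.com/safebrowsing/diagnostic?site=grandma.example`. Using `urlencode` rather than plain string concatenation keeps unusual characters in the host safely escaped.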

Once you've cleaned up your website, use Google's Webmaster Tools to request a malware review. The automatic systems will rescan your website and the warning will be removed if the malware is gone.

Advance warning

I often hear webmasters asking Google for advance warning before a malware label is put on their website. When the label is applied, Google usually emails the website owners and then posts a warning in Google's Webmaster Tools. But no warning is given before the label is applied, so a webmaster can't quickly clean up the site first.

But look at the situation from the user's point of view. As a user, I'd be pretty annoyed if Google sent me to a site it knew was dangerous. Even a short delay would expose some users to that risk, and it doesn't seem justified. I know it's frustrating for a webmaster to see a malware label on their website. But ultimately, protecting users against malware makes the internet a safer place, and everyone benefits, webmasters and users alike.

Google's Webmaster Tools has started a test to provide warnings to webmasters that their server software may be vulnerable. Responding to that warning and updating server software can prevent your website from being compromised with malware. The best way to avoid a malware label is to never have any malware on the site!

Reviews

You can request a review via Google's Webmaster Tools and see the status of the review there. If you think the review is taking too long, make sure to check the status. Finding all the malware on a site is difficult, and the automated scanners are far more accurate than humans. The scanners may have found something you've missed, and the review may have failed. If your site has a malware label, Google's Webmaster Tools will also list some sample URLs that have problems. This is not a full list of all of the problem URLs (because that's often very, very long), but it should get you started.

Finally, don't confuse a malware review with a request for reconsideration. If Google's automated scanners find malware on your website, the site will usually not be removed from search results. There is also a different process that removes spammy websites from Google search results. If that's happened and you disagree with Google, you should submit a reconsideration request. But if your site has a malware label, a reconsideration request won't do any good; for malware, you need to file a malware review from the Overview page.

How long will a review take?

Webmasters are eager to have a Google malware label removed from their site and often ask how long a review of the site will take. Both the original scanning and the review process are fully automated. The systems analyze large portions of the internet, which is a big place, so the review may not happen immediately. Ideally, the label will be removed within a few hours. At its longest, the process should take a day or so.

Written by Oliver Fisher, Software Engineer, Anti-Malware Team