Q: How do I increase the visibility of my site in search results?

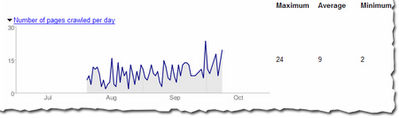

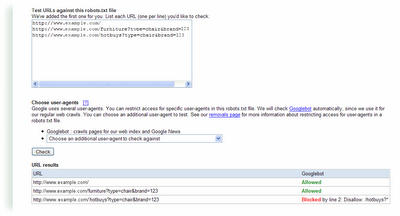

A: Many factors can affect the visibility of your site in search results. We've outlined just a few tips that can make a big difference to your site's visibility in Google search results. First, ensure your site has quality content that is unique. Second, have quality sites link to your site. Third, submit a Sitemap to let us know about all the URLs on your site. Fourth, sign up for a webmaster tools account to get information about how Google sees your site, such as crawl errors, indexing details, and top queries to your site. Lastly, you can visit Webmaster Central and the Webmaster Help Center for more webmaster-related questions and resources.
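If you haven't built a Sitemap before, the third tip can be sketched roughly as follows. This is a minimal illustration, not an official tool: it assumes the basic XML format from the sitemaps.org protocol (a `urlset` element containing one `url`/`loc` pair per page), and the helper name `build_sitemap` and the example.com URLs are made up for the example.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a Sitemap XML document (as a string) listing the given URLs.

    Hypothetical helper for illustration; only the required <loc> element
    is written for each URL.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" in the URL for us.
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Placeholder URLs; replace with the pages on your own site.
    print(build_sitemap([
        "https://www.example.com/",
        "https://www.example.com/about",
    ]))
```

You would typically save the output as a file such as `sitemap.xml` on your site and then submit its address through your webmaster tools account.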

Q: How much do I have to pay to create a Google Custom Search Engine?

A: Nothing -- it's free. In addition to being able to create your own custom search engine on your site, you can make money on your site using AdSense for Search.

Q: Why is it better to create gadgets rather than create feeds?

A: First, gadgets are much more flexible. As a publisher, you control the format of your content. Second, gadgets are by nature more interactive. They can be built with Flash, HTML, or AJAX, and are generally much more interesting than feeds. Finally, your users can customize a gadget to their liking, making your content a lot more targeted.

Q: What is this new ad placement feature for AdSense and how come I don't see it in my account?

A: Ad placements are publisher-defined groups of ad units that advertisers will see when searching for places where they can target their ads. If you don't yet see it in your AdSense account, it's because we've been slowly rolling out this feature to everyone. This exciting feature will be available to all publishers in the next few weeks, so be sure to keep an eye out.

Q: What's the easiest way to put a searchable Google Map on my web page?

A: Use the Map Search Wizard to design a Google Map for your page. The wizard will write all of the code for you; all you need to do is copy and paste the code into your web page, and your users will see your location on a map.

For more information about Google products for webmasters, you can check them out here:

We also wanted to share some photos from PubCon. If you look closely enough, you may be able to see yourself.

Thanks for stopping by, on behalf of the 25 Googlers in attendance!