While hanging out at the Google booth, I was often asked about how to take advantage of our webmaster tools. For example, here's one tip on Common Words.

Common Words: Our prioritized listing of your site's content

The common words feature lists, from highest to lowest priority, the most prevalent words we've found in your site and in links to your site. (This information isn't available for subdirectories or subdomains.) Here are the steps for making the most of common words:

1. Determine your website's key concepts. If it offers getaways to a cattle ranch in Wyoming, the key concepts may be "cattle ranch," "horseback riding," and "Wyoming."

2. Verify that Google detected the same phrases you believe are of high importance. Log in to webmaster tools, select your verified site, and choose Page analysis from the Statistics tab. Here, under "Common words in your site's content," we list the phrases detected from your site's content in order of prevalence. Do the common words lack any concepts you believe are important? Do they include phrases that have little direct relevance to your site?

2a. If you're missing important phrases, you should first review your content. Do you have solid, textual information that explains and relates to the key concepts of your site? If, in the cattle ranch example, "horseback riding" were absent from common words, you might want to review the "activities" page of the site. Does it include mostly images, or only list a schedule of riding lessons, rather than conceptually relevant information?

It may sound obvious, but if you want to rank for a certain set of keywords and we don't even see those keyword phrases on your website, then ranking for those phrases will be difficult.

2b. When you see general, non-illustrative common words that don't relate helpfully to your site's content (e.g. a top listing of "driving directions" or "contact us"), it may be beneficial to increase the ratio of relevant content on your site. (Don't be too worried if you see a few of these common words, as long as you also see words that are relevant to your main topics.) In the cattle ranch example, you would naturally give visitors "driving directions" and "contact us" information. However, if these general, non-illustrative terms surface as the highest-rated common words, or if the entire list of common words consists only of these types of terms, then Google (and likely other search engines) may not have found enough "meaty" content.

2c. If you find that many of the common words still don't relate to your site, check out our blog post on unexpected common words.

3. Here are a few of our favorite posts on improving your site's content:

Target visitors or search engines?

Improving your site's indexing and ranking

NEW! SES Chicago - Using Images

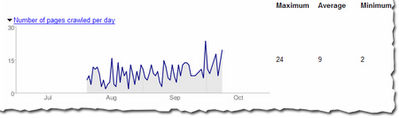

4. Should you decide to update your content, please keep in mind that we will need to recrawl your site in order to recognize changes, and that this may take time. Of course, you can notify us of modifications by submitting a Sitemap.
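If you prefer to run the step 2 comparison in a script rather than by eye, here is a minimal sketch. It assumes you have copied the common words list out of webmaster tools by hand; the function name `review_common_words`, the sample lists, and the choice of what counts as a "generic" term are all hypothetical and only for illustration, not something webmaster tools provides.

```python
def review_common_words(key_concepts, common_words, generic_terms, top_n=3):
    """Compare hand-picked key concepts with a hand-copied common words list.

    All inputs are plain lists of strings; common_words is assumed to be
    ordered from highest to lowest priority, as shown in webmaster tools.
    """
    listed = [word.lower() for word in common_words]
    generic = {term.lower() for term in generic_terms}

    # Step 2a: key concepts that appear in none of the listed phrases.
    missing = [
        concept for concept in key_concepts
        if not any(concept.lower() in word for word in listed)
    ]

    # Step 2b: generic terms among the top-ranked entries, and whether
    # the whole list consists of generic terms.
    generic_on_top = [word for word in listed[:top_n] if word in generic]
    all_generic = bool(listed) and all(word in generic for word in listed)

    return {
        "missing": missing,
        "generic_on_top": generic_on_top,
        "all_generic": all_generic,
    }


# Hypothetical data for the cattle ranch example.
key_concepts = ["cattle ranch", "horseback riding", "Wyoming"]
common_words = ["driving directions", "cattle ranch", "contact us", "wyoming", "schedule"]
generic_terms = ["driving directions", "contact us"]

report = review_common_words(key_concepts, common_words, generic_terms)
print(report)
```

With this sample data, "horseback riding" shows up as missing (a cue to review the content, as in 2a), and two generic terms sit near the top of the list (a cue to add more relevant content, as in 2b).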

Happy holidays from all of us on the Webmaster Central team!

SES Chicago: Googlers Trevor Foucher, Adam Lasnik and Jonathan Simon